A*STAR - Institute for Infocomm Research

Data Scientist Intern

May 2021 - Dec 2021

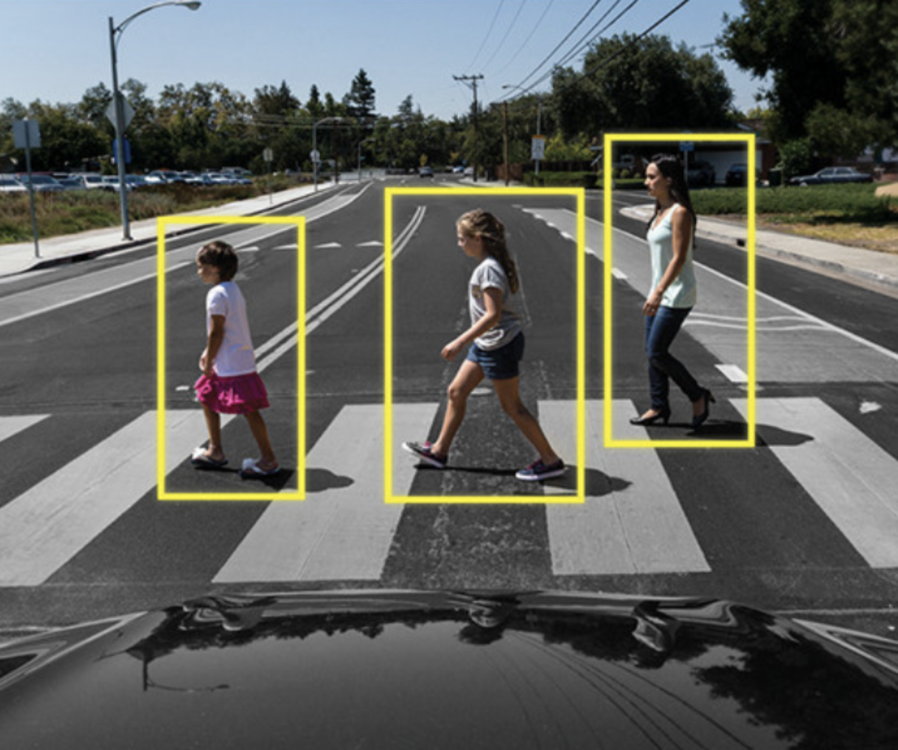

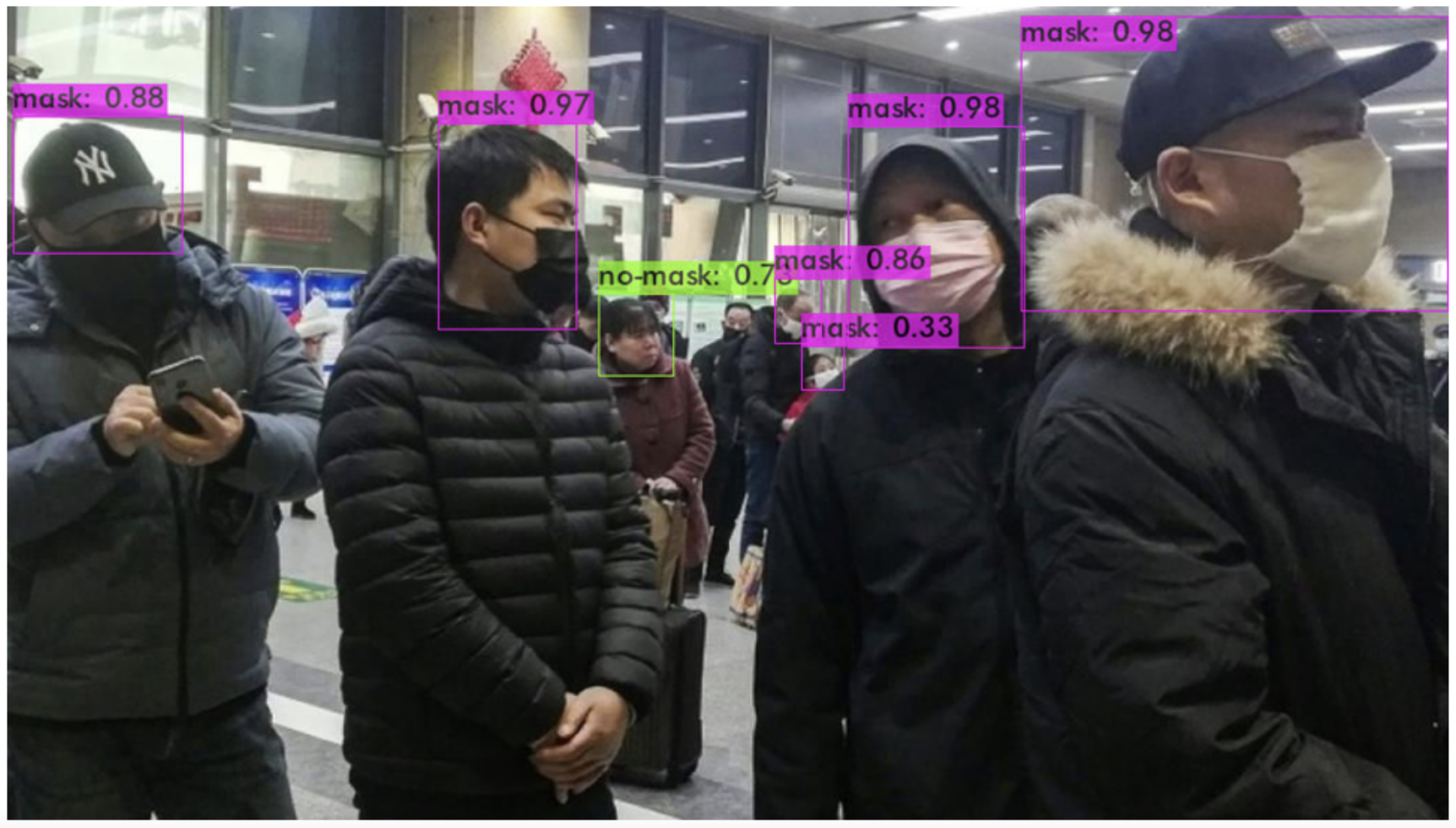

Worked on a vision-based 2D object detection system for autonomous service robots in hospitals, using a semi-supervised learning approach.

Oversaw the training and optimization of SSD-MobileNetV2, STAC, and Unbiased Mean Teacher models to assess the efficacy of semi-supervised and incremental learning.

Publications

International Conference on Social Robotics (ICSR) 2021

Pahwa, R. S., Chang, R., Jie, W., Satini, S., Viswanathan, C., Yiming, D., Jain, V., Pang, C. T., & Wah, W. K. (2021). A survey on object detection performance with different data distributions. https://link.springer.com/chapter/10.1007/978-3-030-90525-5_48

Conference on Learning Factories (CLF) 2022

Chang, R., Pahwa, R. S., Wang, J., Chen, L., Satini, S., Wan, K. W., & Hsu, D. (2022, April 7). Creating semi-supervised learning-based adaptable object detection models for autonomous service robot. SSRN. https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4075994